VSCode Extension: Continue

Ollama

    curl -fsSL https://ollama.com/install.sh | sh

I installed Ollama on a server, so to allow remote access the service configuration file needs to be modified.

    sudo vim /etc/systemd/system/ollama.service
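For remote access, one common approach is to set Ollama's `OLLAMA_HOST` environment variable in the `[Service]` section of the unit file. A minimal sketch, assuming that is the intended change: the helper `ollama_override` is hypothetical and only prints the lines one might add; it does not touch any file.

```shell
# Hypothetical helper: print a [Service] fragment that sets OLLAMA_HOST.
# Assumption: binding to 0.0.0.0 makes the server reachable from other machines.
ollama_override() {
  # $1: address (optionally host:port) that Ollama should listen on
  printf '[Service]\nEnvironment="OLLAMA_HOST=%s"\n' "$1"
}

# Show the fragment; add the Environment line to the unit file as root to apply it.
ollama_override "0.0.0.0"
```

Exposing the service on every interface makes it reachable by anyone who can reach the server, so a firewall rule or a more specific bind address may be preferable.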

All models available for Ollama: https://ollama.com/library

    # Restart the service
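A sketch of what the restart step might look like, assuming Ollama runs as the systemd unit `ollama` created by the install script. The `run` wrapper and the `DRY_RUN` variable are hypothetical conveniences so the commands can be previewed before they are executed.

```shell
# Print the command when DRY_RUN=1 (the default here); otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Assumption: the unit is named "ollama" (from /etc/systemd/system/ollama.service).
restart_ollama() {
  run sudo systemctl daemon-reload   # make systemd re-read the edited unit file
  run sudo systemctl restart ollama  # restart the server with the new settings
}

restart_ollama
```

Running the script with `DRY_RUN=0` executes the two commands instead of printing them.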

Deploying multiple models at the same time [optional]

    # The default port is 11434; here an additional service is deployed on port 11435
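One way to start such an extra instance is to give `ollama serve` a different `OLLAMA_HOST`. This is a sketch under that assumption; the variable names `PORT` and `CMD` are illustrative, and the snippet only prints the command rather than starting a server.

```shell
# Assumption: a second server is started by hand with its own OLLAMA_HOST.
PORT=11435

# Build the command line; OLLAMA_HOST takes an address in host:port form.
CMD="OLLAMA_HOST=0.0.0.0:${PORT} ollama serve"

# Print it for review; run it in a separate terminal or background session to start the server.
echo "$CMD"
```

Each instance listens on its own port, so the `apiBase` in the client configuration decides which one a model entry talks to.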

Continue

Install the Continue extension from the VSCode extension marketplace.

Add the model configuration to config.json. If you use local models, you can comment out "apiBase": "http://your_server_ip:11435". If you use the default ollama service, change port 11435 to 11434.


    "models": [
      {
        "title": "Codellama 7b",
        "provider": "ollama",
        "model": "codellama:7b",
        "apiBase": "http://your_server_ip:11435"
      },
      {
        "title": "Codellama 13b",
        "provider": "ollama",
        "model": "codellama:13b",
        "apiBase": "http://your_server_ip:11435"
      },
      {
        "title": "Codellama 34b",
        "provider": "ollama",
        "model": "codellama:34b",
        "apiBase": "http://your_server_ip:11435"
      },
      {
        "title": "StarCoder2 3b",
        "provider": "ollama",
        "model": "starcoder2:3b",
        "apiBase": "http://node1:11435"
      },
      {
        "title": "StarCoder2 7b",
        "provider": "ollama",
        "model": "starcoder2:7b",
        "apiBase": "http://node1:11435"
      },
      {
        "title": "starcoder2:15b",
        "provider": "ollama",
        "model": "starcoder2:15b",
        "apiBase": "http://node1:11435"
      },
      {
        "title": "Llama2 7b",
        "provider": "ollama",
        "model": "llama2:7b",
        "apiBase": "http://your_server_ip:11435"
      },
      {
        "title": "Llama2 13b",
        "provider": "ollama",
        "model": "llama2:13b",
        "apiBase": "http://your_server_ip:11435"
      },
      {
        "title": "Llama2 70b",
        "provider": "ollama",
        "model": "llama2:70b",
        "apiBase": "http://your_server_ip:11435"
      }
    ],
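Because every entry follows the same four-field pattern, the list can also be generated instead of typed by hand. The following is only a sketch: `API_BASE`, `model_entry`, and `models_json` are hypothetical names, and the model tag is reused as the title for simplicity.

```shell
# Assumption: every model is served from the same endpoint; adjust as needed.
API_BASE="http://your_server_ip:11435"

# Print one Continue model entry for an Ollama model tag (tag doubles as title).
model_entry() {
  printf '  {\n    "title": "%s",\n    "provider": "ollama",\n    "model": "%s",\n    "apiBase": "%s"\n  }' \
    "$1" "$1" "$API_BASE"
}

# Print a "models" array with one entry per tag, separated by commas.
models_json() {
  printf '"models": [\n'
  sep=""
  for tag in "$@"; do
    printf '%b' "$sep"   # %b expands the \n inside the separator
    model_entry "$tag"
    sep=',\n'
  done
  printf '\n],\n'
}

models_json codellama:7b starcoder2:3b llama2:7b
```

The printed fragment can be pasted into config.json in place of a hand-written list.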

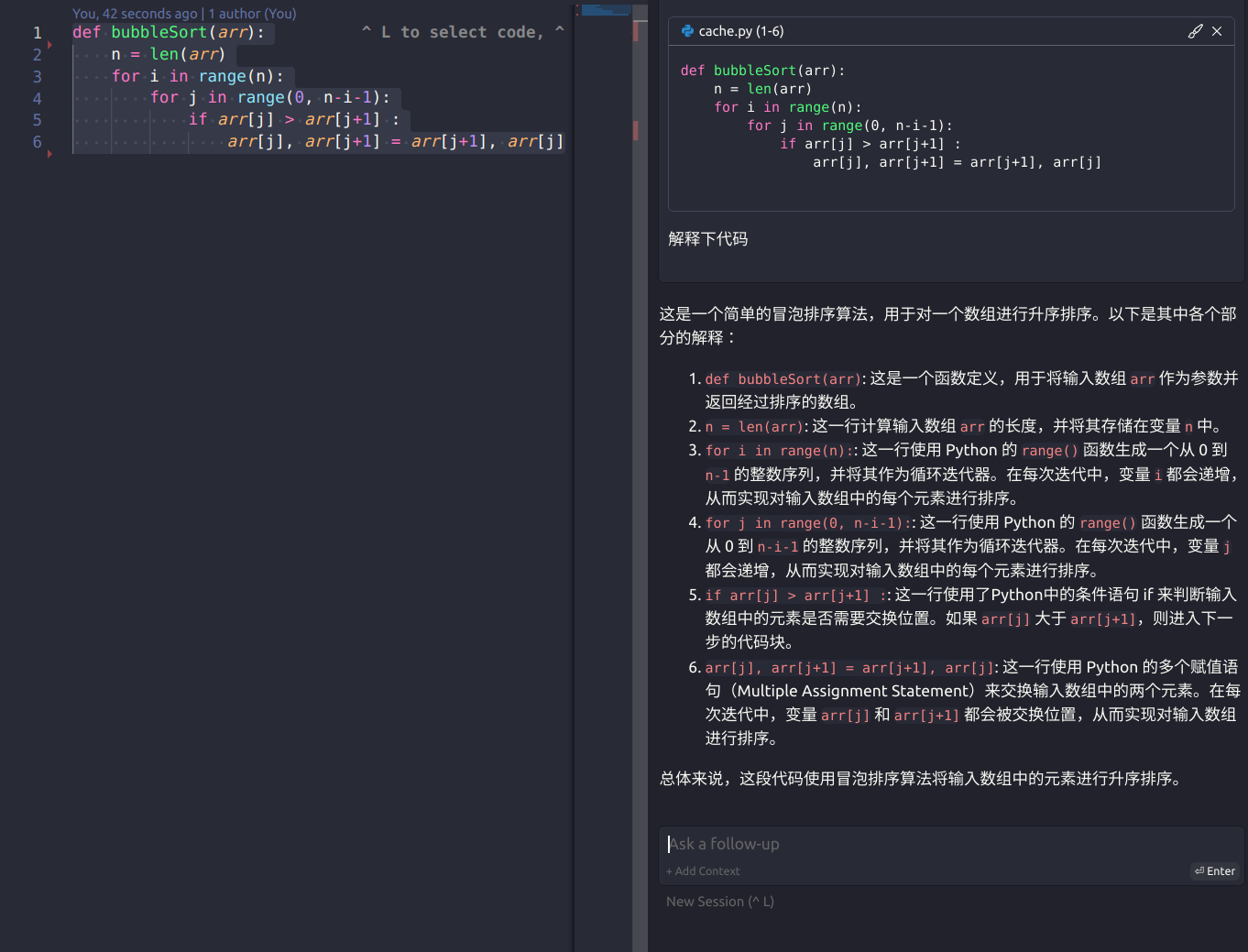

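Results

Select some code and press Ctrl + L to open the chat window, where you can ask the LLM directly, for example to write unit tests or check for bugs.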

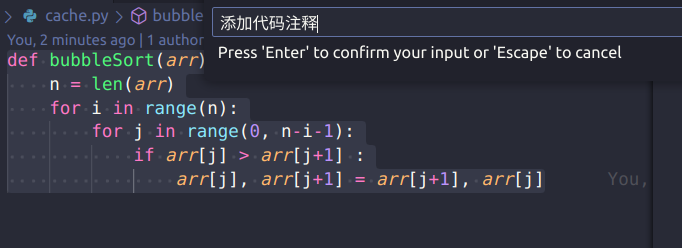

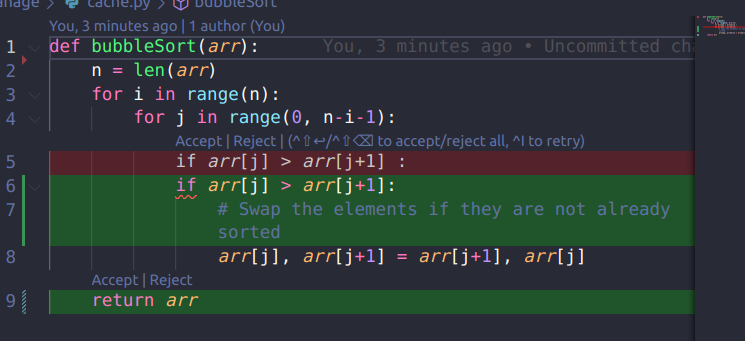

Press Ctrl + I to insert code: a prompt input box pops up, and code is generated according to your request.
